Improve healthcare services through patient 360: A zero-ETL approach to enable near real-time data analytics

AWS Big Data

MARCH 27, 2024

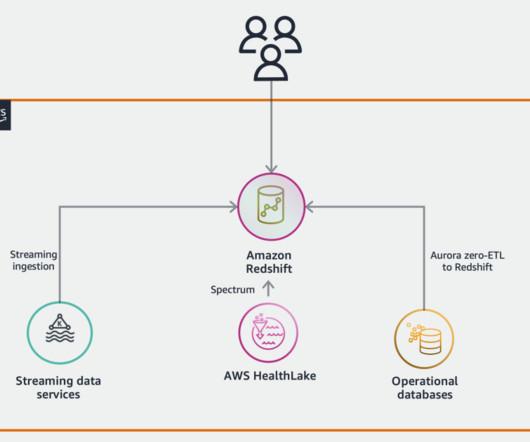

AWS has invested in a zero-ETL (extract, transform, and load) future so that builders can focus more on creating value from data, instead of having to spend time preparing data for analysis. You can send data from your streaming source to this resource for ingesting the data into a Redshift data warehouse.

Let's personalize your content