Sure, Trust Your Data… Until It Breaks Everything: How Automated Data Lineage Saves the Day

Octopai

JUNE 9, 2024

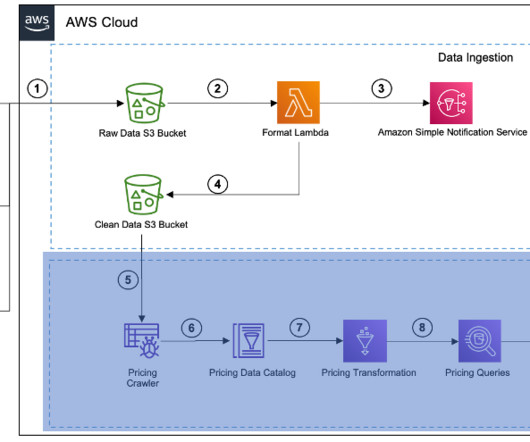

By doing so, they aimed to drive innovation, optimize operations, and enhance patient care. They invested heavily in data infrastructure and hired a talented team of data scientists and analysts. Solving the data lineage problem directly supported their data products by ensuring data integrity and reliability.
