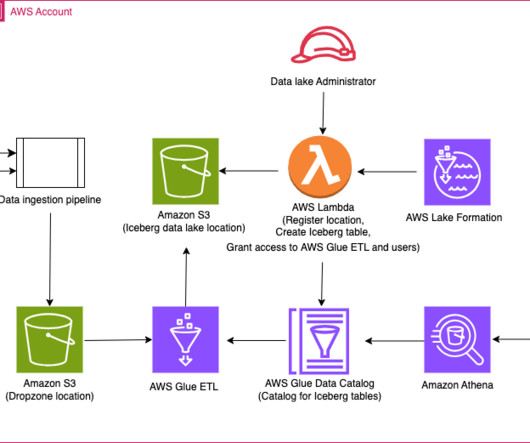

Use Apache Iceberg in your data lake with Amazon S3, AWS Glue, and Snowflake

AWS Big Data

APRIL 3, 2024

Businesses are constantly evolving, and data leaders are challenged every day to meet new requirements. For many enterprises and large organizations, it is not feasible to have one processing engine or tool to deal with the various business requirements. This post is co-written with Andries Engelbrecht and Scott Teal from Snowflake.

Let's personalize your content