Small Businesses Use Big Data to Offset Risk During Economic Uncertainty

Smart Data Collective

MARCH 2, 2023

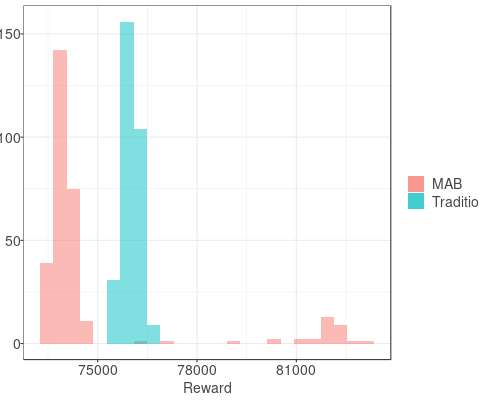

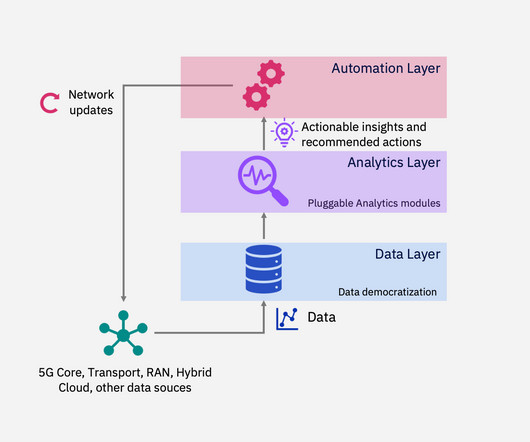

Big data technology used to be a luxury for small business owners. In 2023, big data is no longer a luxury. One survey from March 2020 showed that 67% of small businesses spend at least $10,000 every year on data analytics technology. Patil and other experts argue that big data can help small businesses offset risk during periods of economic uncertainty.
