FPGA vs. GPU: Which is better for deep learning?

IBM Big Data Hub

MAY 10, 2024

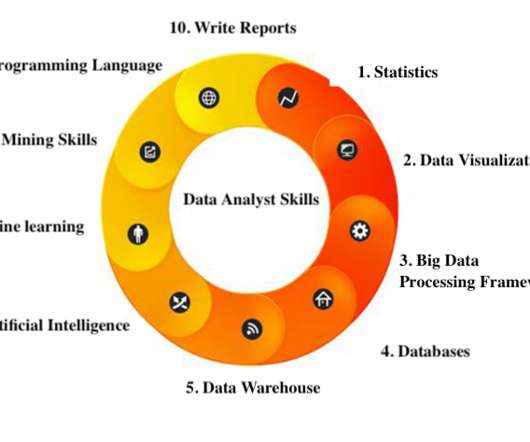

Underpinning most artificial intelligence (AI), deep learning is a subset of machine learning that uses multi-layered neural networks to simulate the complex decision-making power of the human brain. Deep learning requires a tremendous amount of computing power.
