DS Smith sets a single-cloud agenda for sustainability

CIO Business Intelligence

DECEMBER 6, 2023

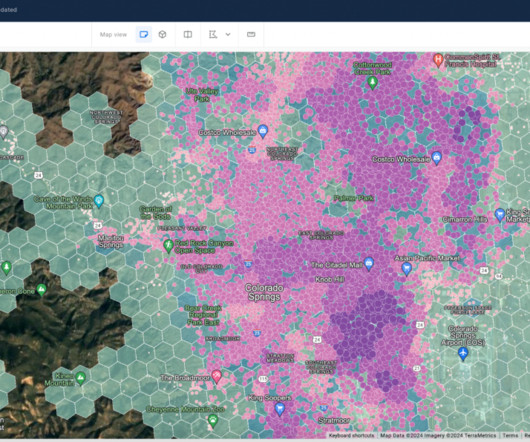

The migration, still in its early stages, is designed to benefit from the efficiencies learned, the sustainability strategies proven, and the advances made in data and analytics on the AWS platform over the past decade.
