Use Amazon Athena with Spark SQL for your open-source transactional table formats

AWS Big Data

JANUARY 24, 2024

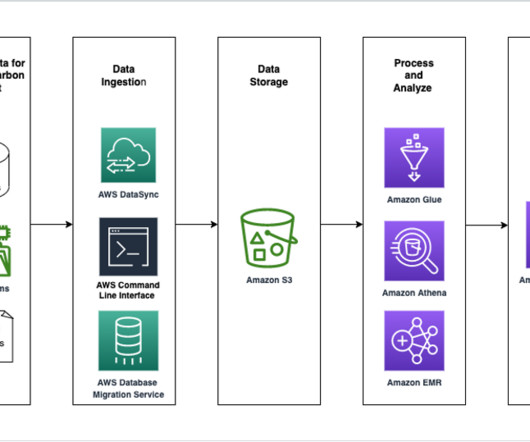

AWS-powered data lakes, supported by the unmatched availability of Amazon Simple Storage Service (Amazon S3), can handle the scale, agility, and flexibility required to combine different data and analytics approaches. It will never remove files that are still required by a non-expired snapshot.

Let's personalize your content