Orca Security’s journey to a petabyte-scale data lake with Apache Iceberg and AWS Analytics

AWS Big Data

JULY 20, 2023

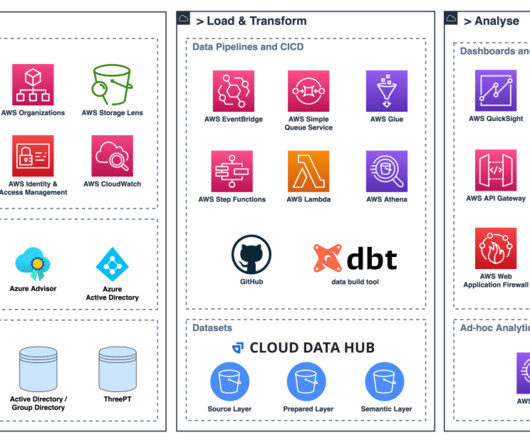

Data is now the driving force behind many industries, so a modern data architecture is pivotal to an organization's success. This ensures that the data is suitable for training purposes. These robust capabilities ensure that data within the data lake remains accurate, consistent, and reliable.
