Avoid generative AI malaise to innovate and build business value

CIO Business Intelligence

APRIL 1, 2024

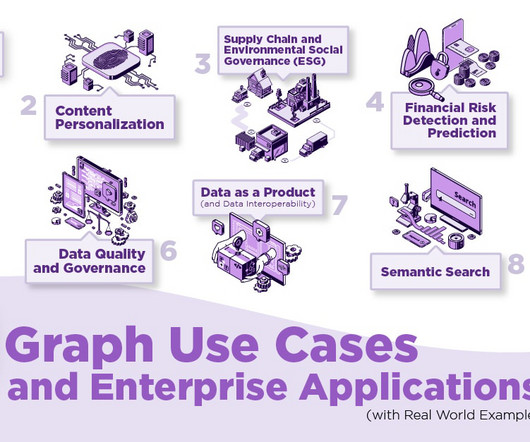

To capture the “as-is” state of your environment, develop topology diagrams and document your technical systems. GenAI requires high-quality data, so ensure that your data is cleansed, consistent, and centrally stored, ideally in a data lake. Then assess your readiness.
