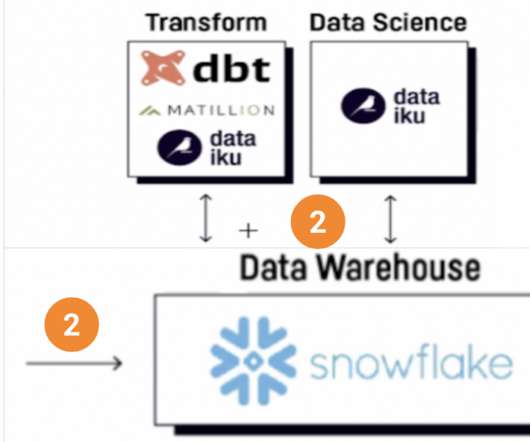

The Data Warehouse is Dead, Long Live the Data Warehouse, Part I

Data Virtualization

OCTOBER 18, 2022

The post The Data Warehouse is Dead, Long Live the Data Warehouse, Part I appeared first on Data Virtualization blog - Data Integration and Modern Data Management Articles, Analysis and Information. In times of potentially troublesome change, the apparent paradox and inner poetry of these.
