Data governance in the age of generative AI

AWS Big Data

FEBRUARY 29, 2024

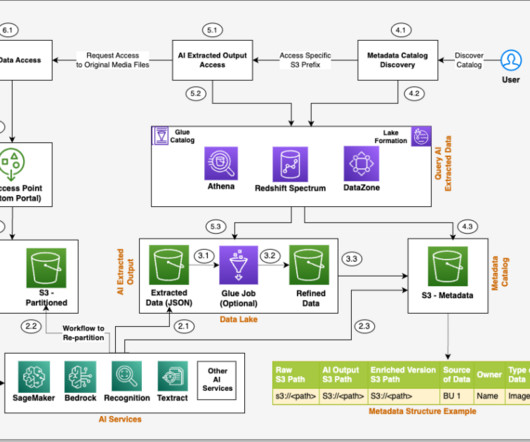

First, many LLM use cases rely on enterprise knowledge that needs to be drawn from unstructured data such as documents, transcripts, and images, in addition to structured data from data warehouses. As part of the transformation, the objects need to be treated to ensure data privacy (for example, PII redaction).

Let's personalize your content