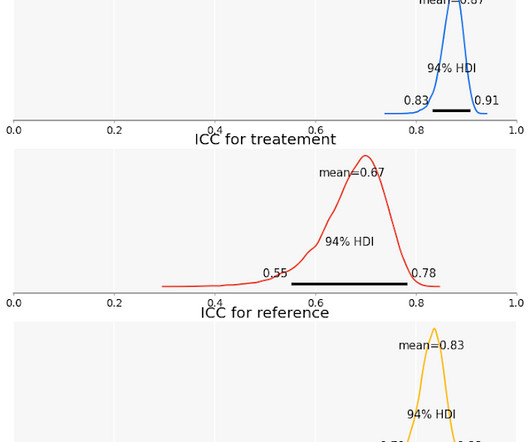

Measuring Validity and Reliability of Human Ratings

The Unofficial Google Data Science Blog

JULY 18, 2023

Even after we account for disagreement, human ratings may not measure exactly what we want to measure. How we think about the quality of human ratings, and how we quantify that understanding, is the subject of this post. While human-labeled data is critical to many important applications, it also brings many challenges.
