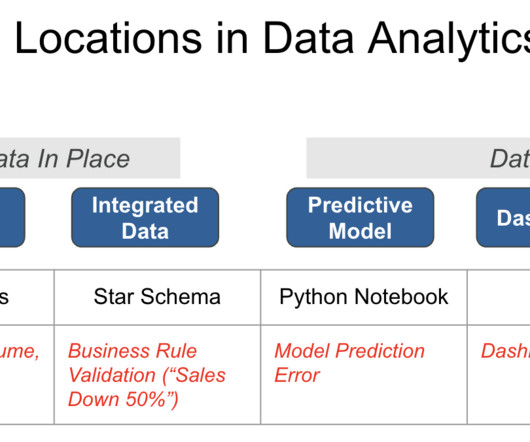

Bridging the Gap: How ‘Data in Place’ and ‘Data in Use’ Define Complete Data Observability

DataKitchen

SEPTEMBER 21, 2023

In a world where 97% of data engineers report burnout and crisis mode seems to be the default setting for data teams, a Zen-like calm feels like an unattainable dream.
