Introducing Cloudera DataFlow Designer: Self-service, No-Code Dataflow Design

Cloudera

DECEMBER 9, 2022

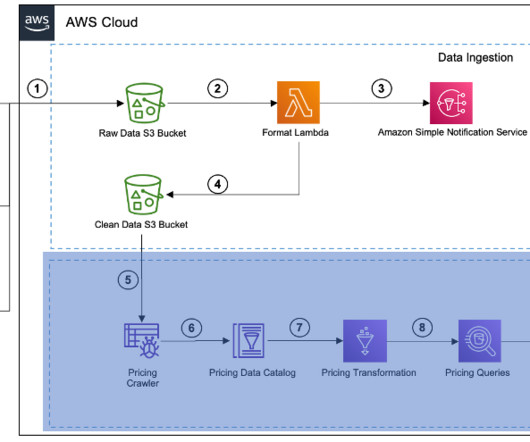

Developers need to onboard new data sources, chain multiple data transformation steps together, and explore data as it travels through the flow. Cloudera DataFlow Designer offers a reimagined visual editor that boosts developer productivity and enables self-service, providing interactivity when needed while saving costs.
