Measuring Incrementality: Controlled Experiments to the Rescue!

Occam's Razor

SEPTEMBER 19, 2011

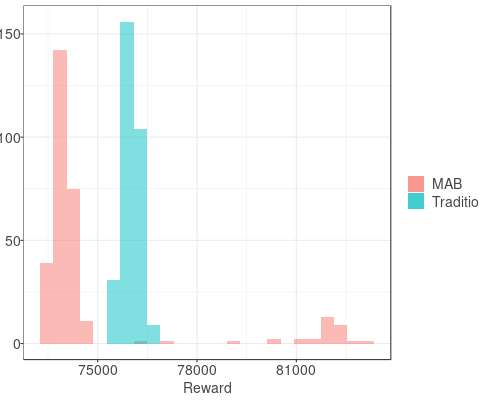

In a nutshell, a controlled experiment works like this: you understand all the environmental variables currently in play, carefully choose more than one group of "like-type" subjects, expose each group to a different mix of media, measure the differences in outcomes, and prove or disprove your hypothesis (DO FACEBOOK NOW!!!). The nice thing is that even a hypothesis like that can be tested!
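To make the "measure differences in outcomes" step concrete, here is a minimal sketch. It assumes a simple two-group design: one group exposed to the new media mix (say, Facebook ads) and one control group that is not. It also assumes the outcome is a yes/no conversion. The function name `incrementality` and all the numbers are hypothetical. The significance check is a standard two-proportion z-test, not necessarily the method the author would use.

```python
import math


def incrementality(test_conversions, test_size, control_conversions, control_size):
    """Hypothetical helper: compare an exposed (test) group against a control group.

    Returns (absolute lift, relative lift, z-score, two-sided p-value).
    """
    p_test = test_conversions / test_size            # conversion rate with the new media mix
    p_control = control_conversions / control_size   # conversion rate without it
    abs_lift = p_test - p_control                    # incremental conversions per subject
    rel_lift = abs_lift / p_control                  # lift relative to the baseline

    # Pooled rate under the null hypothesis that exposure makes no difference
    p_pool = (test_conversions + control_conversions) / (test_size + control_size)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / test_size + 1 / control_size))
    z = abs_lift / se

    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return abs_lift, rel_lift, z, p_value


# Hypothetical example: 10,000 "like-type" subjects in each group
abs_lift, rel_lift, z, p_value = incrementality(260, 10_000, 200, 10_000)
print(f"absolute lift {abs_lift:.2%}, relative lift {rel_lift:.0%}, z = {z:.2f}, p = {p_value:.4f}")
```

The control group is what makes this a measure of incrementality. Without it you only know how many people converted after seeing the ads, not how many converted *because* of them. A small p-value here (conventionally below 0.05) suggests the difference is unlikely to be chance. However, it is only trustworthy if the groups really were alike to begin with.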
