Data Warehouses: Basic Concepts for data enthusiasts

Analytics Vidhya

SEPTEMBER 13, 2022

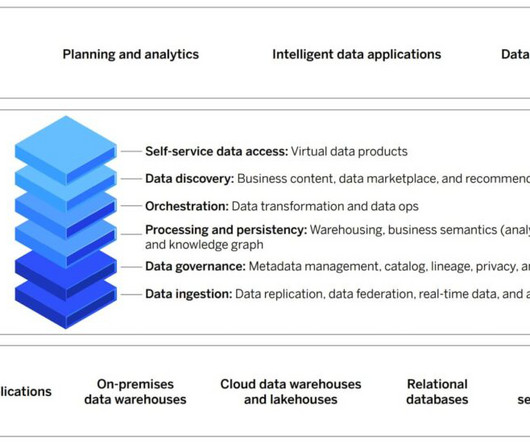

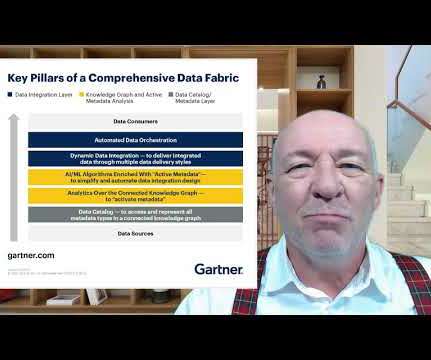

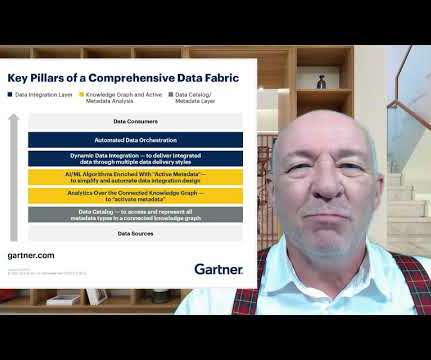

This article was published as a part of the Data Science Blogathon. Introduction The purpose of a data warehouse is to combine multiple sources to generate different insights that help companies make better decisions and forecasting. It consists of historical and commutative data from single or multiple sources.

Let's personalize your content