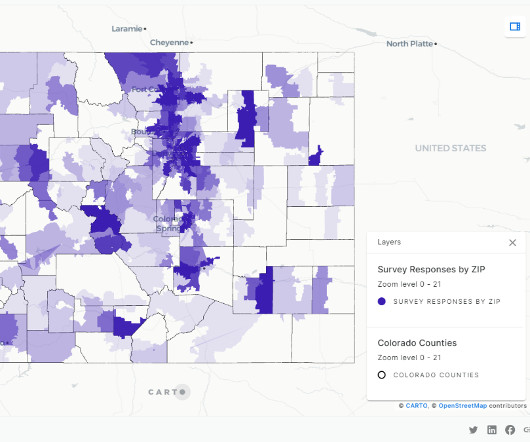

How HR&A uses Amazon Redshift spatial analytics on Amazon Redshift Serverless to measure digital equity in states across the US

AWS Big Data

DECEMBER 5, 2023

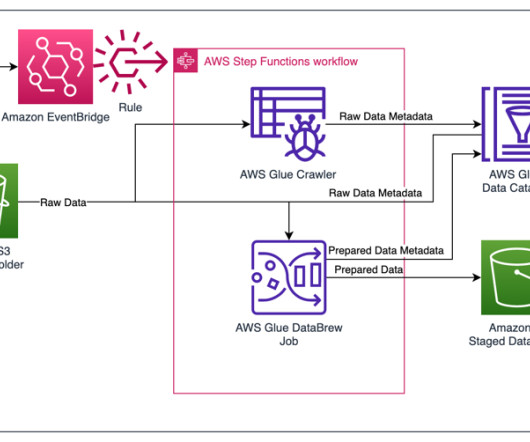

For files with known structures, a Redshift stored procedure takes the file location and table name as parameters and runs a COPY command to load the raw data into the corresponding Redshift tables.

He has more than 13 years of experience designing and implementing large-scale big data and analytics solutions.
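To make the stored-procedure load described above more concrete, here is a minimal Python sketch of the statement such a procedure might assemble from its two parameters. It is not the article's implementation: the function name `build_copy_statement`, the `iam_role_arn` parameter, the S3 location, and the CSV-with-header format are all assumptions chosen for illustration.

```python
import re

# Hypothetical helper: mirrors the two parameters the text mentions
# (file location, table name). Everything else is an assumption.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?$")


def build_copy_statement(file_location: str, table_name: str, iam_role_arn: str) -> str:
    """Return a Redshift COPY statement for one raw file.

    Assumes the file lives in Amazon S3 and is a CSV with one header row;
    the source does not state either detail.
    """
    # Accept only plain or schema-qualified identifiers, because the table
    # name is interpolated directly into the SQL text.
    if not _IDENTIFIER.match(table_name):
        raise ValueError(f"unsafe table name: {table_name!r}")
    # Reject quotes so the string literals below cannot be broken out of.
    if not file_location.startswith("s3://") or "'" in file_location:
        raise ValueError(f"unsupported file location: {file_location!r}")
    if "'" in iam_role_arn:
        raise ValueError("unsafe IAM role ARN")

    return (
        f"COPY {table_name} "
        f"FROM '{file_location}' "
        f"IAM_ROLE '{iam_role_arn}' "
        "FORMAT AS CSV IGNOREHEADER 1;"
    )


if __name__ == "__main__":
    # Placeholder bucket, table, and role names, not values from the article.
    print(
        build_copy_statement(
            "s3://example-bucket/raw/example.csv",
            "raw.example_table",
            "arn:aws:iam::123456789012:role/ExampleRedshiftRole",
        )
    )
```

Inside Redshift, a stored procedure would typically build the same text and run it with dynamic SQL. Validating the table name first matters in either setting, because an identifier cannot be passed as a bound parameter.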
