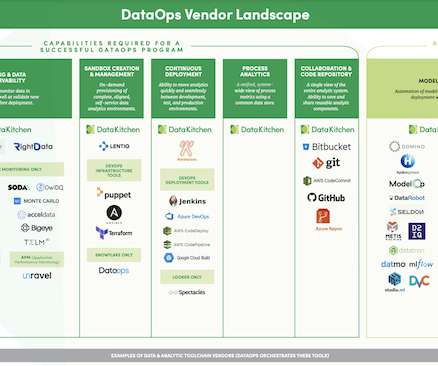

The DataOps Vendor Landscape, 2021

DataKitchen

APRIL 13, 2021

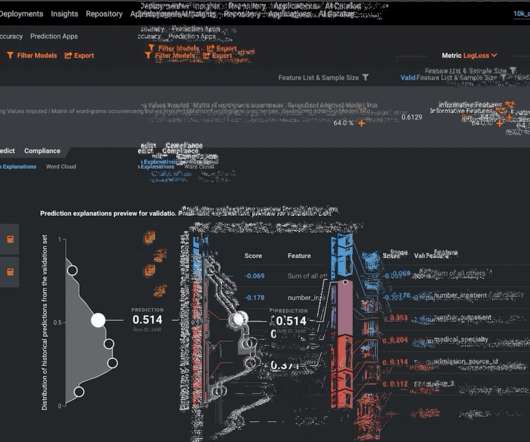

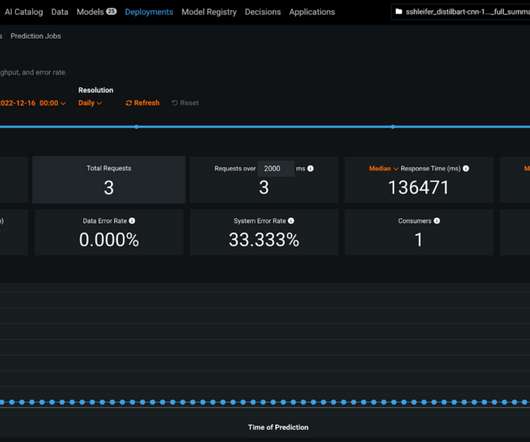

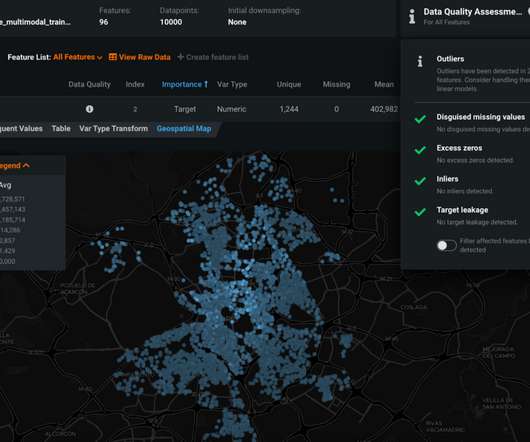

Read the complete blog below for a more detailed description of the vendors and their capabilities. Testing and Data Observability. It orchestrates complex pipelines, toolchains, and tests across teams, locations, and data centers. Production Monitoring and Development Testing.
