Texas Rangers data transformation modernizes stadium operations

CIO Business Intelligence

OCTOBER 18, 2022

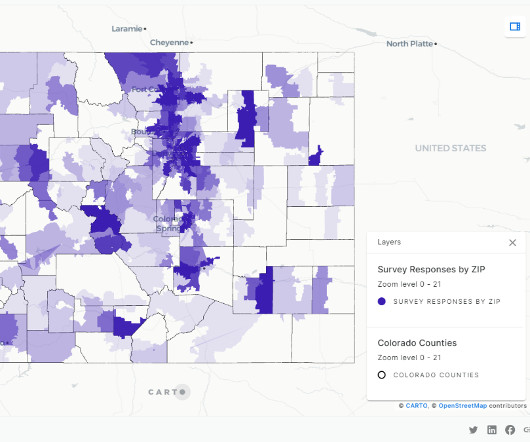

Driving better fan experiences with data. Noel had already established a relationship with consulting firm Resultant through a smaller data visualization project. Resultant then provided the business operations team with a set of recommendations for going forward, which the Rangers implemented with the consulting firm’s help.

Let's personalize your content