Enable data analytics with Talend and Amazon Redshift Serverless

AWS Big Data

JULY 25, 2023

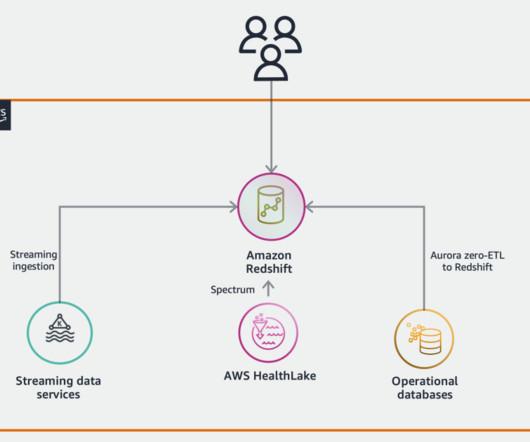

Today, to accelerate and scale data analytics, companies are looking for an approach that minimizes infrastructure management and predicts computing needs for different types of workloads, including spikes and ad hoc analytics.

Prerequisites

To complete the integration, you need a Redshift Serverless data warehouse.
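Before starting the integration, it can help to confirm that the Redshift Serverless prerequisite is actually in place. The following is a minimal sketch, not part of the original post: it assumes a boto3 `redshift-serverless` client is passed in, and the helper name `workgroup_is_available` and the workgroup name in the usage comment are hypothetical.

```python
def workgroup_is_available(client, workgroup_name):
    """Return True if the named Redshift Serverless workgroup exists and is AVAILABLE.

    `client` is assumed to be a boto3 "redshift-serverless" client.
    """
    try:
        response = client.get_workgroup(workgroupName=workgroup_name)
    except client.exceptions.ResourceNotFoundException:
        # No workgroup with this name exists in the account and Region.
        return False
    return response["workgroup"]["status"] == "AVAILABLE"


# Example usage (requires boto3 and AWS credentials; the name is a placeholder):
# import boto3
# client = boto3.client("redshift-serverless")
# print(workgroup_is_available(client, "my-talend-workgroup"))
```

The client is taken as a parameter rather than created inside the function, so the check can be exercised against a stub without AWS access.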
