Measure performance of AWS Glue Data Quality for ETL pipelines

AWS Big Data

MARCH 12, 2024

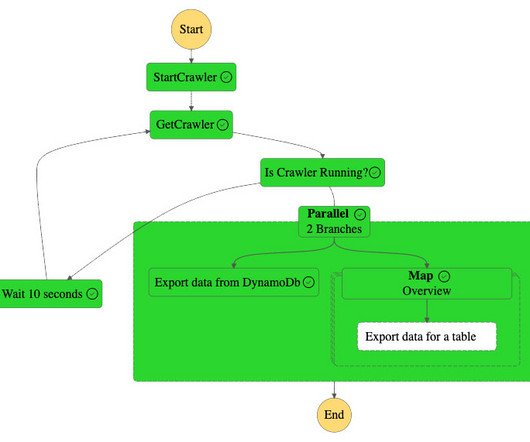

In recent years, data lakes have become a mainstream architecture, and data quality validation is a critical factor to improve the reusability and consistency of the data. In this post, we provide benchmark results of running increasingly complex data quality rulesets over a predefined test dataset.

Let's personalize your content