The Top Three Entangled Trends in Data Architectures: Data Mesh, Data Fabric, and Hybrid Architectures

Cloudera

SEPTEMBER 29, 2022

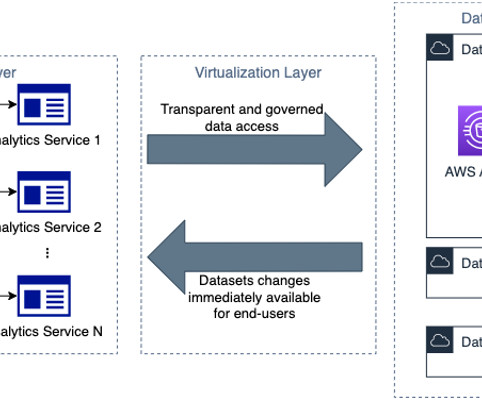

Each of these trends claims to be a complete data architecture model that solves the “everything everywhere all at once” problem. Data teams are unsure whether to commit to just one of these trends or to combine them. In a data mesh, the data itself is treated as a product.
