Data Engineering – A Journal with Pragmatic Blueprint

Analytics Vidhya

JUNE 23, 2022

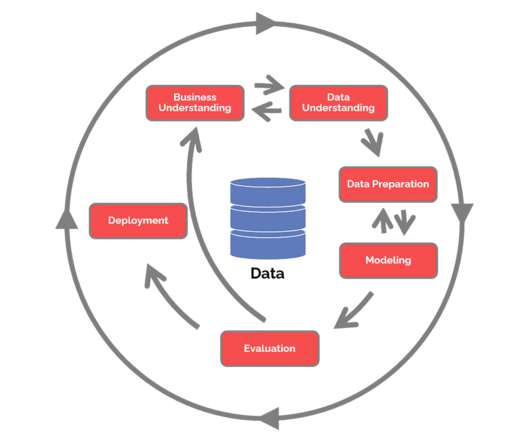

This article was published as a part of the Data Science Blogathon.

Introduction to Data Engineering

The volume of data produced from innumerable sources is increasing drastically day by day, so processing and storing this data has become highly strenuous.
