Towards optimal experimentation in online systems

The Unofficial Google Data Science Blog

APRIL 23, 2024

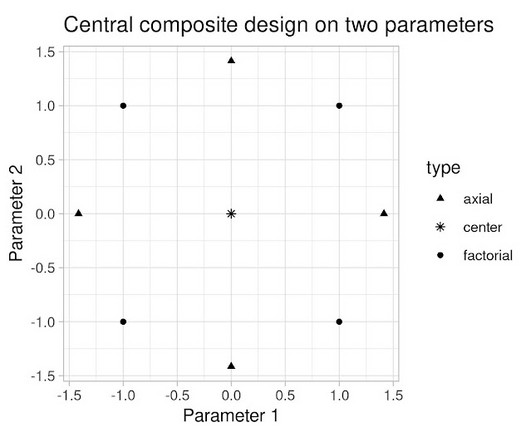

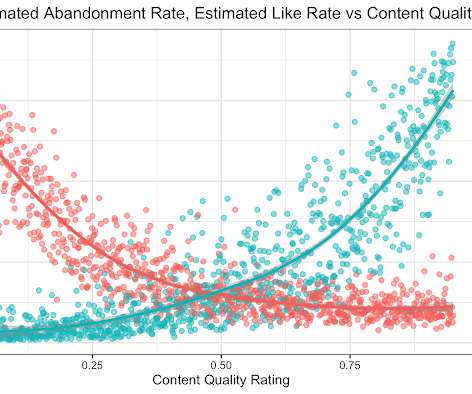

... the weight given to Likes in our video recommendation algorithm) while $Y$ is a vector of outcome measures such as different metrics of user experience (e.g., ...

Crucially, it takes into account the uncertainty inherent in our experiments.

Figure 2: Spreading measurements out makes estimates of the model (the slope of the line) more accurate.
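To make the Figure 2 caption concrete, here is a minimal sketch that assumes a simple linear model with independent, constant-variance noise, which the post itself may or may not use. Under that assumption, the textbook variance of the least-squares slope is $\sigma^2 / \sum_i (x_i - \bar{x})^2$, so the standard error shrinks as the tested parameter values are spread further apart. The function name, the noise level, and both sets of design points are hypothetical and chosen only for illustration.

```python
import math


def slope_standard_error(xs, sigma=1.0):
    """Standard error of the least-squares slope for design points xs.

    Assumes y = a + b * x + noise, with independent noise of
    standard deviation sigma (an illustrative assumption).
    """
    mean_x = sum(xs) / len(xs)
    # Sum of squared deviations: larger when the points are spread out.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sigma / math.sqrt(sxx)


# Two hypothetical designs with the same number of measurements.
clustered = [0.4 + 0.2 * i / 9 for i in range(10)]  # bunched in [0.4, 0.6]
spread = [i / 9 for i in range(10)]                 # spread over [0.0, 1.0]

se_clustered = slope_standard_error(clustered)
se_spread = slope_standard_error(spread)

print(f"clustered design: slope s.e. = {se_clustered:.3f}")
print(f"spread design:    slope s.e. = {se_spread:.3f}")
```

Because the spread design covers a range five times wider with the same number of points, its slope standard error comes out five times smaller under these assumptions, with no additional measurements required.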
