Introducing blueprint discovery and other UI enhancements for Amazon OpenSearch Ingestion

AWS Big Data

MAY 22, 2024

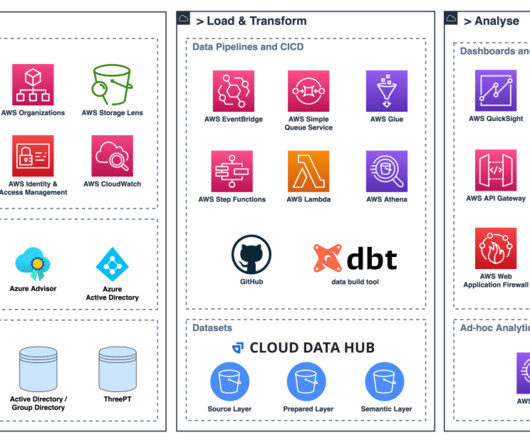

OpenSearch Ingestion can ingest data from a wide variety of sources and offers a rich ecosystem of built-in processors to handle even complex data transformation needs.

He is deeply passionate about data architecture and helps customers build analytics solutions at scale on AWS.
