Build a serverless transactional data lake with Apache Iceberg, Amazon EMR Serverless, and Amazon Athena

AWS Big Data

MARCH 10, 2023

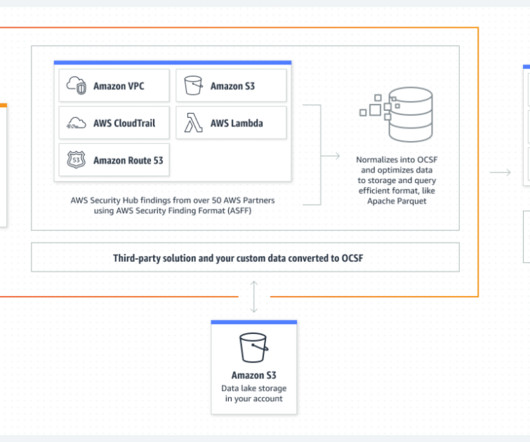

Since the deluge of big data over a decade ago, many organizations have learned to build applications to process and analyze petabytes of data. Data lakes have served as a central repository to store structured and unstructured data at any scale and in various formats.

Let's personalize your content