Simplify operational data processing in data lakes using AWS Glue and Apache Hudi

AWS Big Data

SEPTEMBER 13, 2023

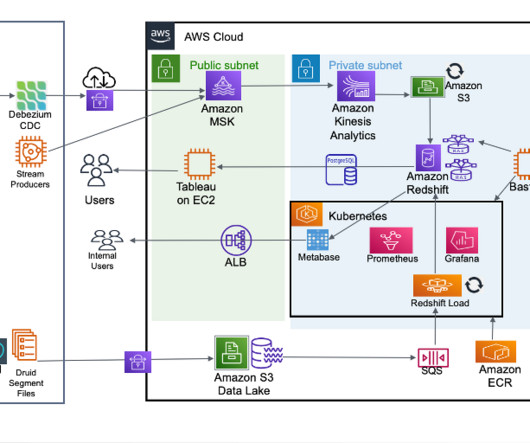

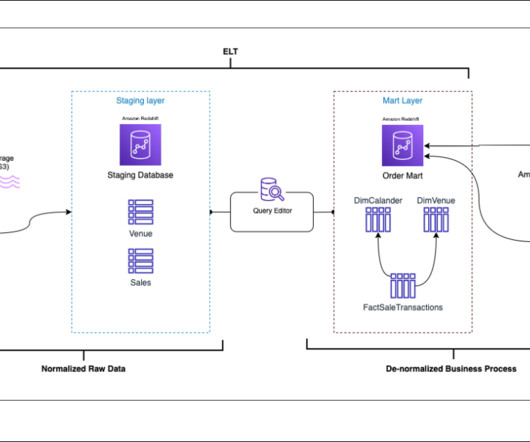

A modern data architecture is an evolutionary architecture pattern designed to integrate a data lake, data warehouse, and purpose-built stores with a unified governance model. The company wanted the ability to continue processing operational data in the secondary Region in the rare event of primary Region failure.

Let's personalize your content