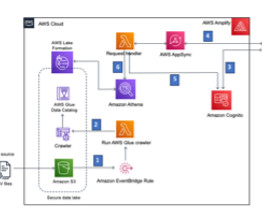

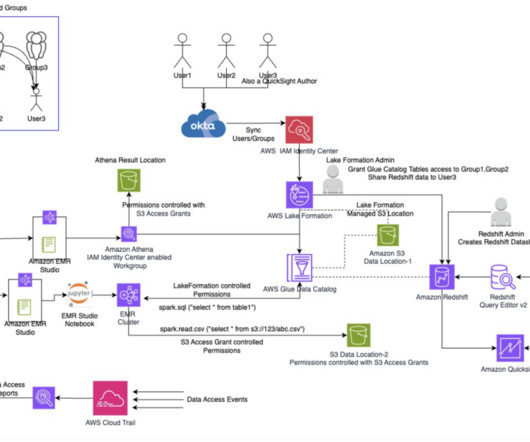

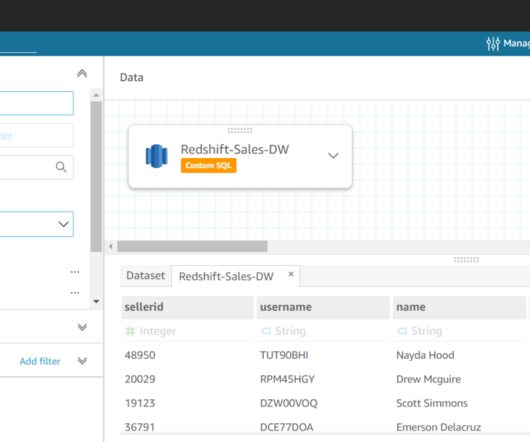

Enable business users to analyze large datasets in your data lake with Amazon QuickSight

AWS Big Data

JUNE 23, 2023

Imperva Cloud WAF protects hundreds of thousands of websites and blocks billions of security events every day. These events, along with many other security data types, are stored in Imperva’s Threat Research Multi-Region data lake. Imperva uses this data to improve its business outcomes.
