Create a modern data platform using the Data Build Tool (dbt) in the AWS Cloud

AWS Big Data

NOVEMBER 9, 2023

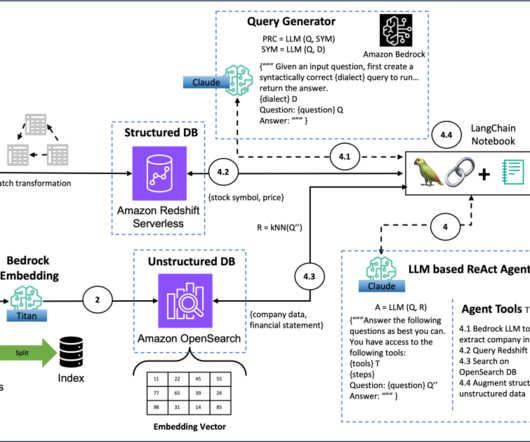

A modern data platform entails maintaining data across multiple layers, targeting diverse platform capabilities like high performance, ease of development, cost-effectiveness, and DataOps features such as CI/CD, lineage, and unit testing. AWS Glue – AWS Glue is used to load files into Amazon Redshift through the S3 data lake.

Let's personalize your content