Power enterprise-grade Data Vaults with Amazon Redshift – Part 2

AWS Big Data

NOVEMBER 16, 2023

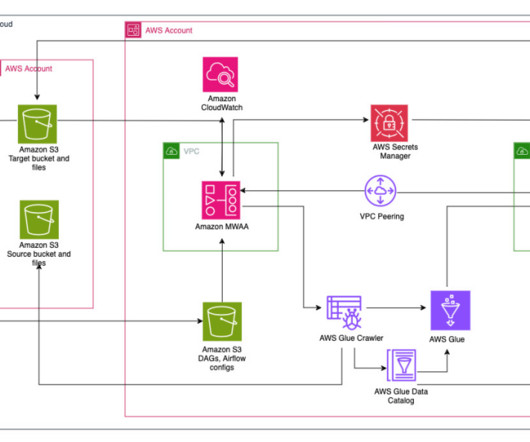

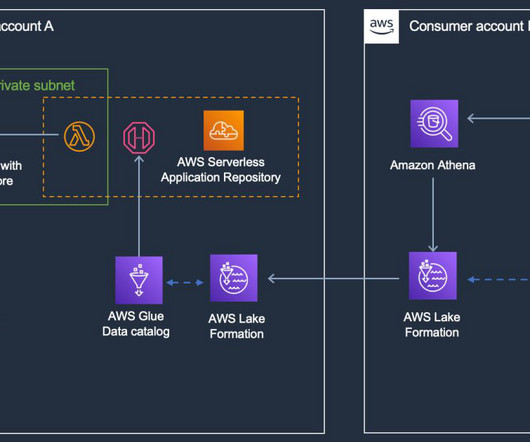

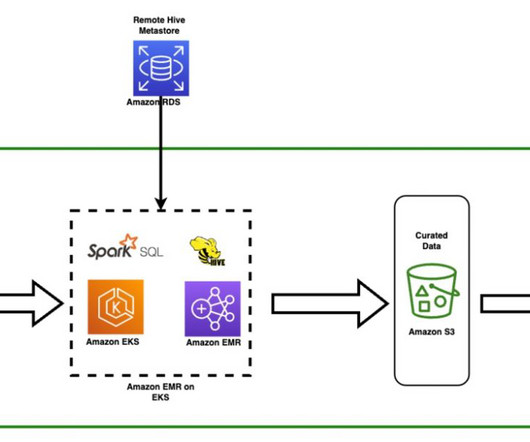

Amazon Redshift is a popular cloud data warehouse, offering a fully managed cloud-based service that seamlessly integrates with an organization’s Amazon Simple Storage Service (Amazon S3) data lake, real-time streams, machine learning (ML) workflows, transactional workflows, and much more—all while providing up to 7.9x

Let's personalize your content