End to End Statistics for Data Science

Analytics Vidhya

OCTOBER 29, 2021

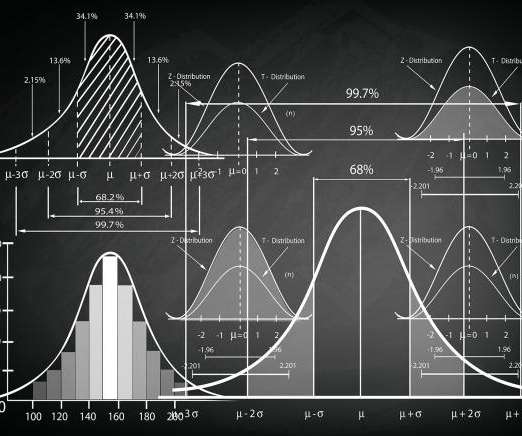

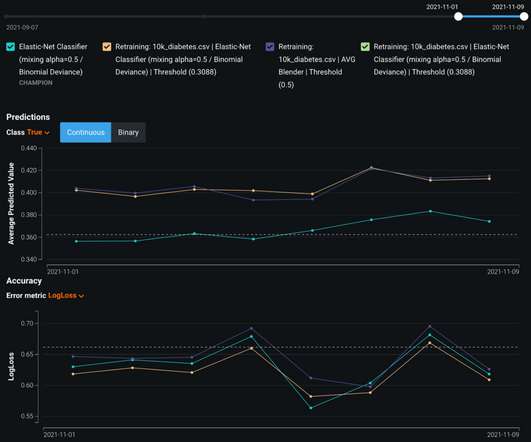

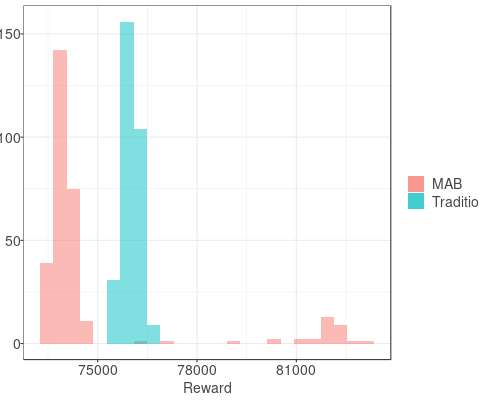

This article was published as a part of the Data Science Blogathon.

Introduction to Statistics

Statistics is a type of mathematical analysis that employs quantified models and representations to analyse experimental data or data from real-world studies. Data processing is […].
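The idea of representing experimental data with a few quantified summaries can be illustrated with Python's standard `statistics` module. This is a minimal sketch: the measurement values and the `summarize` helper are hypothetical, chosen only for illustration, and are not taken from the original article.

```python
import statistics

# Hypothetical measurements from an experiment (illustrative values only).
measurements = [12.1, 11.8, 12.4, 12.0, 11.9, 12.6, 12.2]


def summarize(data):
    """Hypothetical helper: reduce a sample to a few standard numeric summaries."""
    return {
        "n": len(data),                       # sample size
        "mean": statistics.mean(data),        # arithmetic mean
        "median": statistics.median(data),    # middle value of the sorted sample
        "stdev": statistics.stdev(data),      # sample standard deviation (n - 1 denominator)
        "min": min(data),
        "max": max(data),
    }


summary = summarize(measurements)
for name, value in summary.items():
    # Print floats to three decimals and leave integers (the count) as they are.
    print(f"{name}: {value:.3f}" if isinstance(value, float) else f"{name}: {value}")
```

Seven raw numbers collapse into a handful of descriptive values (centre, spread and range), which is the kind of quantified representation the definition above refers to.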
