What Are the Most Important Steps to Protect Your Organization’s Data?

Smart Data Collective

APRIL 13, 2021

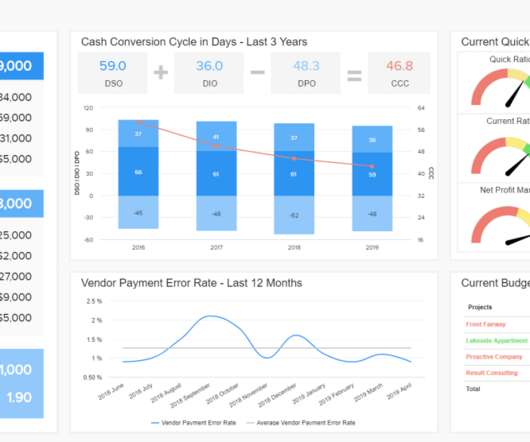

In modern business, data is one of the most important resources for any organization trying to thrive. That makes business data highly valuable to cybercriminals, who even go after metadata. Big data can reveal trade secrets, financial information, and passwords or access keys to crucial enterprise resources.
