What is a DataOps Engineer?

DataKitchen

OCTOBER 5, 2021

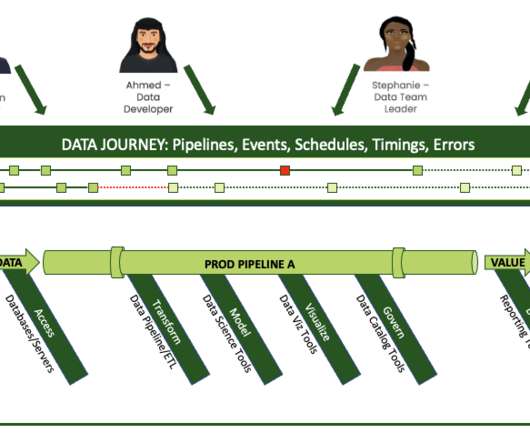

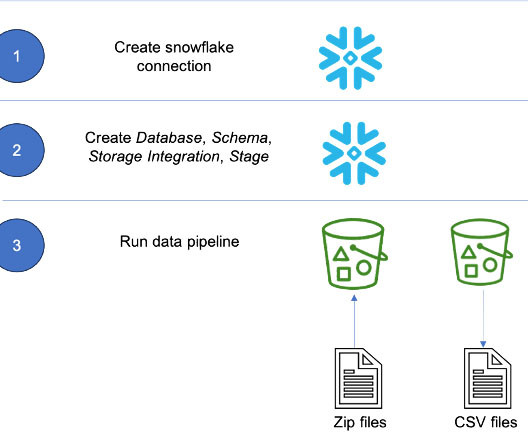

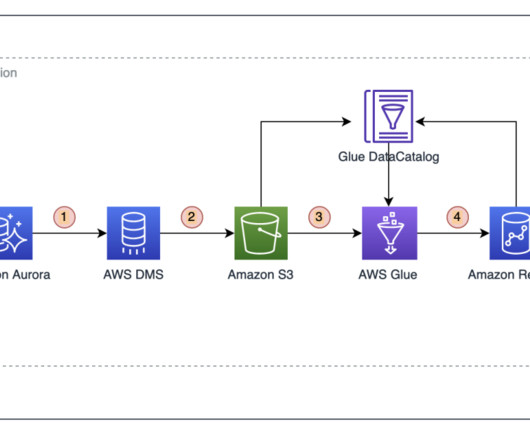

DataOps establishes a process hub that automates data production and analytics development workflows so that the data team is more efficient, more innovative, and less prone to error. In this blog, we’ll explore the role of the DataOps Engineer in driving the data organization to higher levels of productivity.
