Data replication holds the key to hybrid cloud effectiveness

CIO Business Intelligence

MARCH 18, 2024

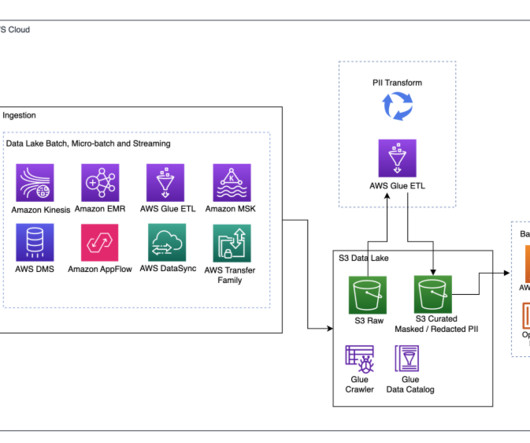

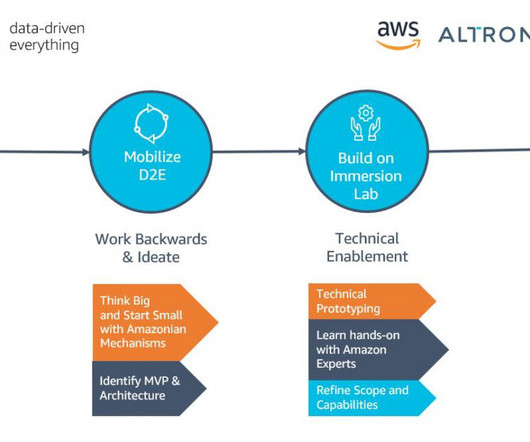

A hybrid cloud approach offers organizations a wide range of benefits, from greater agility and resiliency to eliminating data silos and optimizing workloads. But there is more to managing data than simply adopting hybrid cloud. With data replication, data needs to be replicated only once and can then be applied to multiple targets.
