Are You Content with Your Organization’s Content Strategy?

Rocket-Powered Data Science

JULY 6, 2021

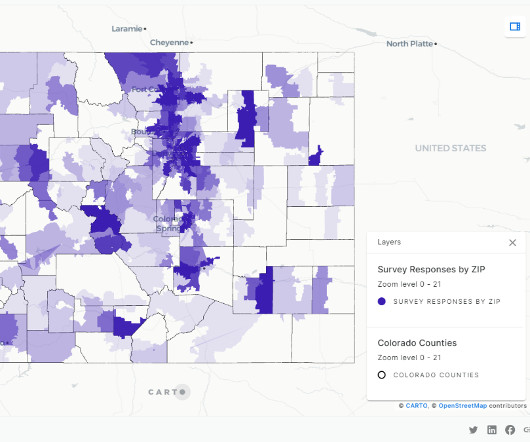

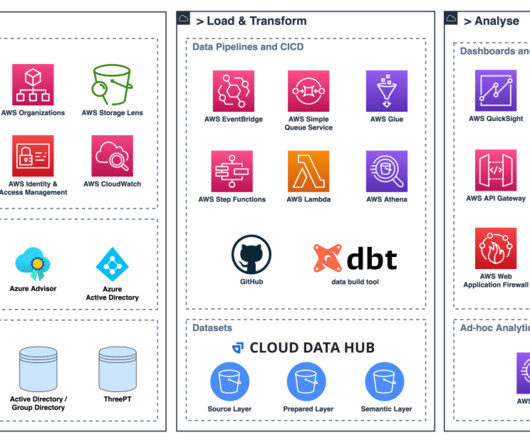

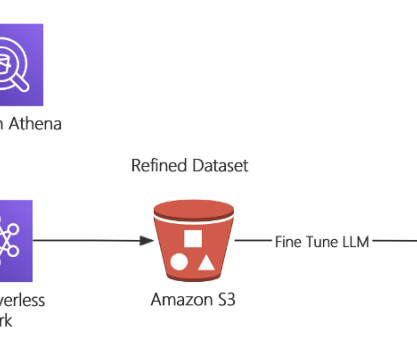

Specifically, in the modern era of massive data collections and exploding content repositories, keyword searches alone are no longer sufficient. This is accomplished through tags, annotations, and metadata (TAM). Data catalogs are very useful and important. Collect, curate, and catalog (i.e.,
