Optimization Strategies for Iceberg Tables

Cloudera

FEBRUARY 14, 2024

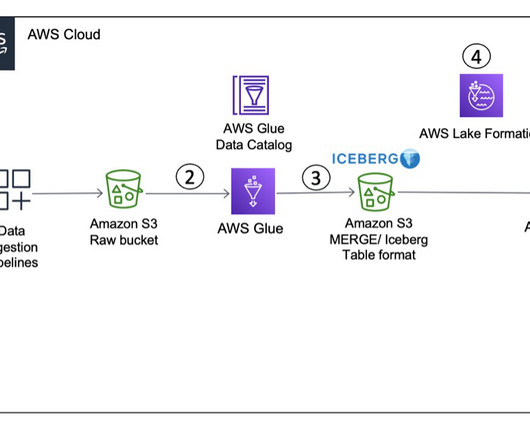

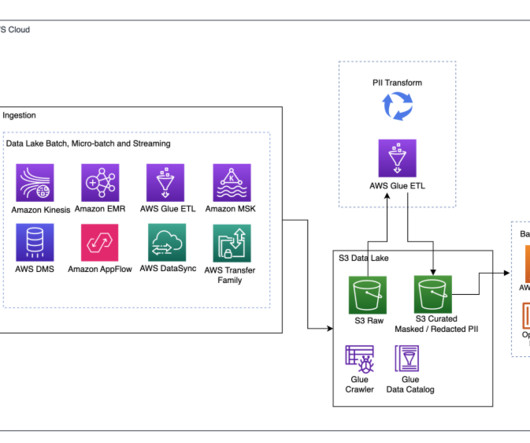

Introduction

Apache Iceberg has recently grown in popularity because it adds data warehouse-like capabilities to your data lake, making it easier to analyze all of your data, both structured and unstructured. You can combine the optimization strategies described in this article and adapt them to your particular use cases.
