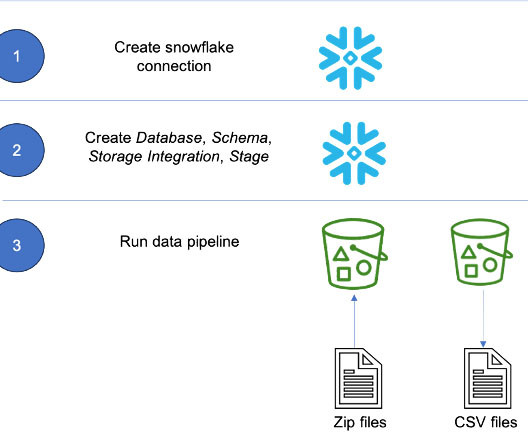

End-to-end development lifecycle for data engineers to build a data integration pipeline using AWS Glue

AWS Big Data

JULY 26, 2023

To harness the power of data at scale over the long term, we highly recommend designing an end-to-end development lifecycle for your data integration pipelines. One common question from our customers: Is it possible to develop and test AWS Glue data integration jobs on my local laptop?
