Cloudera DataFlow Designer: The Key to Agile Data Pipeline Development

Cloudera

MARCH 14, 2023

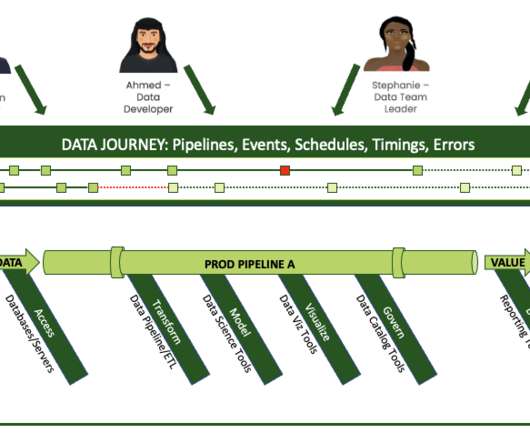

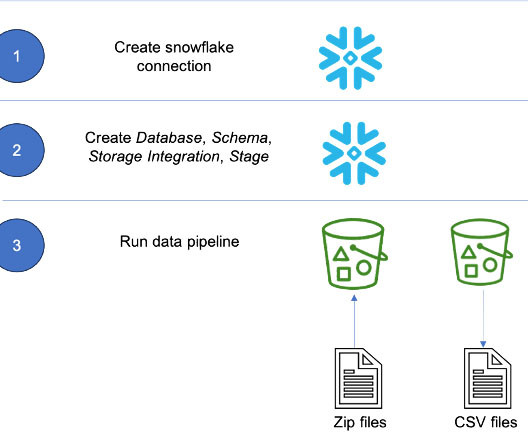

- Allows developers to iteratively develop and test processing logic with as little overhead as possible.
- Integrates with existing CI/CD processes to promote a data pipeline to production.
- Provides monitoring, alerting, and troubleshooting for production data pipelines.
