Successfully conduct a proof of concept in Amazon Redshift

AWS Big Data

MARCH 27, 2024

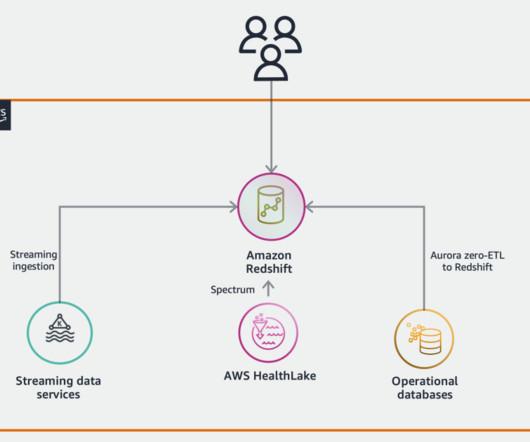

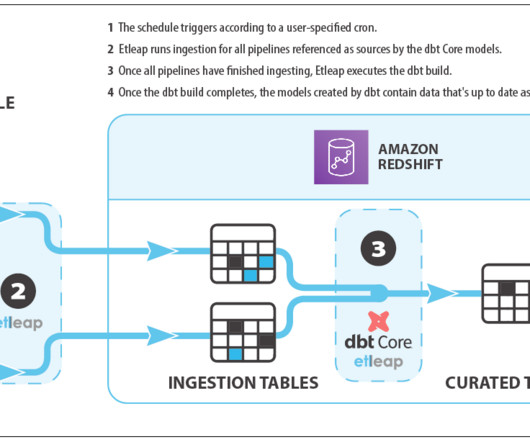

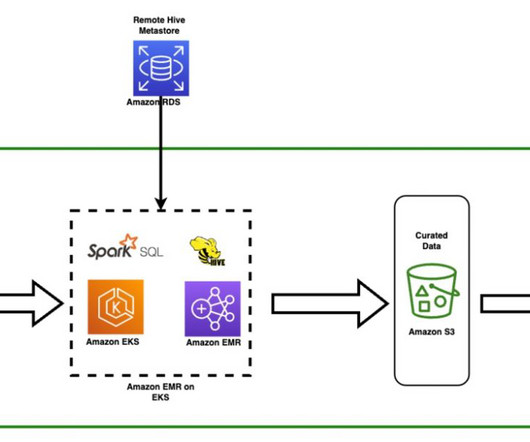

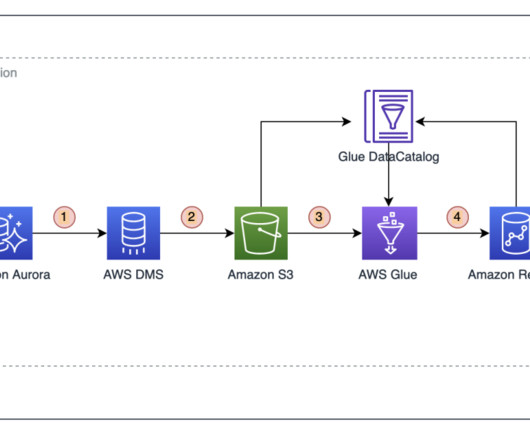

Amazon Redshift is a fast, scalable, and fully managed cloud data warehouse that allows you to process and run your complex SQL analytics workloads on structured and semi-structured data. Complete the implementation tasks such as data ingestion and performance testing.

Let's personalize your content