RLHF For High-Performance Decision-Making: Strategies and Optimization

Analytics Vidhya

SEPTEMBER 11, 2023

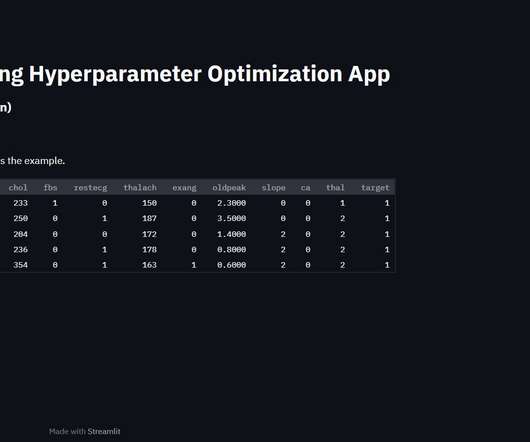

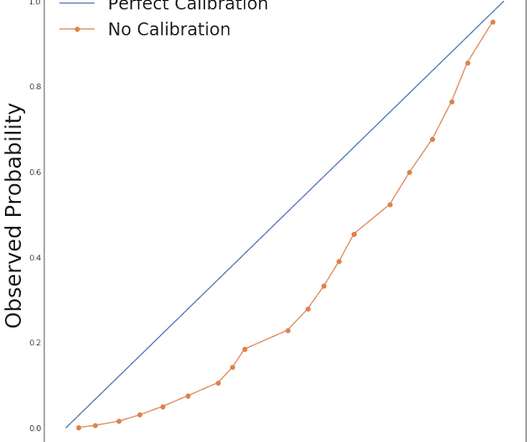

RLHF can be engineered to optimize decision-making and improve performance in complex, real-world systems.

Introduction

Reinforcement Learning from Human Feedback (RLHF) is an emerging field that combines the principles of reinforcement learning (RL) with human feedback.
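In practice, RLHF usually needs a way to turn human feedback into a reward signal that an RL algorithm can optimize. One common approach (not necessarily the one used later in this article) is to collect pairwise preferences, where a person says which of two options is better, and then fit a reward model with a Bradley-Terry style loss. The sketch below assumes a hypothetical linear reward over toy feature vectors. The names `reward`, `preference_prob`, and `train_reward_model` are illustrative, not taken from any library. The policy-optimization step that would consume the learned reward is omitted.

```python
import math

# Hypothetical linear reward model r(x) = w . x (illustrative assumption).
def reward(weights, features):
    return sum(w * f for w, f in zip(weights, features))

def preference_prob(weights, preferred, rejected):
    """Bradley-Terry probability that `preferred` is ranked above `rejected`."""
    diff = reward(weights, preferred) - reward(weights, rejected)
    return 1.0 / (1.0 + math.exp(-diff))

def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    """Fit weights by gradient descent on the negative log-likelihood
    of the human preference labels."""
    weights = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for preferred, rejected in pairs:
            p = preference_prob(weights, preferred, rejected)
            # Gradient of -log(p) w.r.t. w is -(1 - p) * (preferred - rejected).
            for i in range(dim):
                grad[i] -= (1.0 - p) * (preferred[i] - rejected[i])
        # Average the gradient over the pairs and take one descent step.
        weights = [w - lr * g / len(pairs) for w, g in zip(weights, grad)]
    return weights

# Toy feedback: each tuple is (preferred option, rejected option).
# In this made-up data the labeler favors options with a higher first feature.
pairs = [
    ([1.0, 0.2], [0.0, 0.3]),
    ([0.8, 0.5], [0.1, 0.4]),
    ([0.9, 0.0], [0.2, 0.1]),
]
w = train_reward_model(pairs, dim=2)
print(w, preference_prob(w, [1.0, 0.0], [0.0, 0.0]))
```

The learned reward can then serve as the objective for an RL algorithm. Keeping the reward model separate from the policy lets human judgments be collected once and reused across many training updates.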
