Top 15 Warehouse KPIs & Metrics For Efficient Management

datapine

OCTOBER 11, 2023

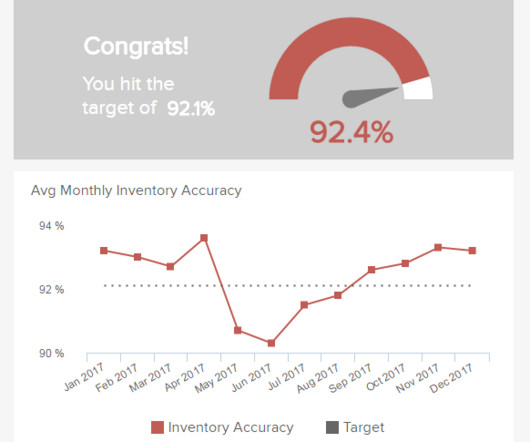

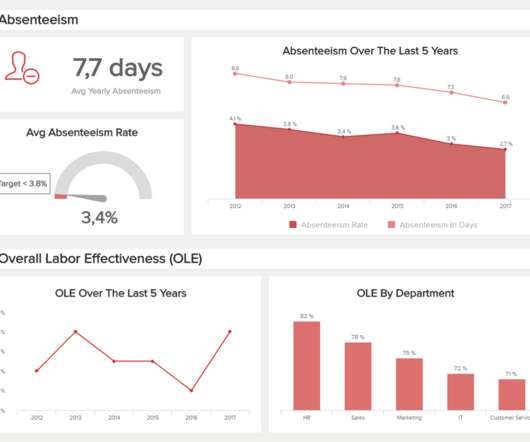

With the right logistics analytics tools, warehouse managers can track metrics and KPIs and extract trends and patterns to ensure that operations run at their full potential. This makes the use of warehousing metrics a significant competitive advantage.
