Measure performance of AWS Glue Data Quality for ETL pipelines

AWS Big Data

MARCH 12, 2024

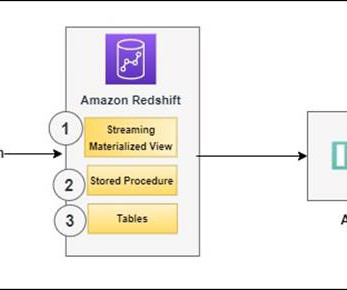

AWS Glue Data Quality is built on Deequ, an open source tool developed and used at Amazon to calculate data quality metrics, verify data quality constraints, and track changes in the data distribution, so you can focus on describing how data should look instead of implementing algorithms. In the Create job section, choose Visual ETL.
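To make the idea of "describing how data should look" concrete, here is a minimal, self-contained sketch in plain Python. It is not the Deequ or AWS Glue Data Quality API; the function names (`completeness`, `distinctness`, `evaluate`) and the sample rows are hypothetical and exist only to illustrate computing metrics and then checking declared constraints against them.

```python
from typing import Callable

Row = dict[str, object]
# A constraint is (description, metric over all rows, pass/fail check on the metric).
Constraint = tuple[str, Callable[[list[Row]], float], Callable[[float], bool]]


def completeness(rows: list[Row], column: str) -> float:
    """Fraction of rows whose value in `column` is not None."""
    if not rows:
        return 1.0
    return sum(r.get(column) is not None for r in rows) / len(rows)


def distinctness(rows: list[Row], column: str) -> float:
    """Number of distinct non-null values divided by the number of non-null values."""
    values = [r[column] for r in rows if r.get(column) is not None]
    if not values:
        return 1.0
    return len(set(values)) / len(values)


def evaluate(rows: list[Row], constraints: list[Constraint]) -> dict[str, bool]:
    """Compute each metric and report whether its declared constraint holds."""
    return {name: check(metric(rows)) for name, metric, check in constraints}


# Hypothetical sample data: one missing email and one duplicated id.
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 2, "email": "c@example.com"},
]

# Declare how the data should look; the metric code stays generic.
constraints: list[Constraint] = [
    ("email completeness >= 0.5", lambda r: completeness(r, "email"), lambda m: m >= 0.5),
    ("id is unique", lambda r: distinctness(r, "id"), lambda m: m == 1.0),
]

results = evaluate(rows, constraints)
print(results)
```

In this sketch the email constraint passes (two of three rows are populated) and the uniqueness constraint fails because the value 2 appears twice in `id`. The point is the separation of concerns: the rules are declared as data, while the metric calculations are reusable.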
