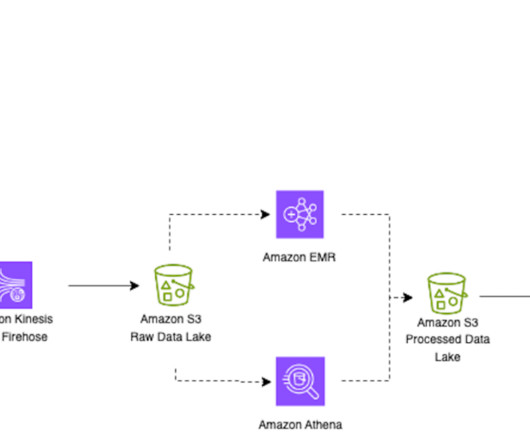

Apache Iceberg optimization: Solving the small files problem in Amazon EMR

AWS Big Data

OCTOBER 3, 2023

Systems of this nature generate a huge number of small objects and need attention to compact them to a more optimal size for faster reading, such as 128 MB, 256 MB, or 512 MB. As of this writing, only the optimize-data optimization is supported. For our testing, we generated about 58,176 small objects with total size of 2 GB.

Let's personalize your content