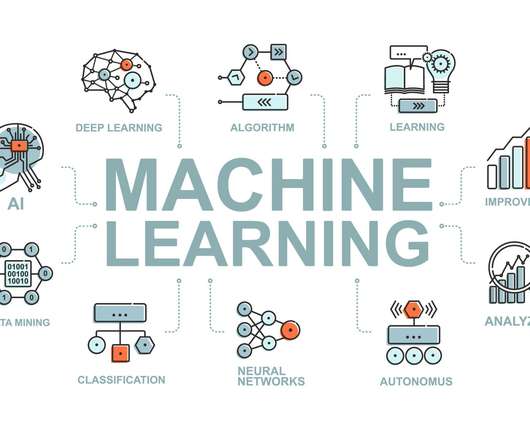

Measuring Fairness in Machine Learning Models

Dataiku

NOVEMBER 11, 2020

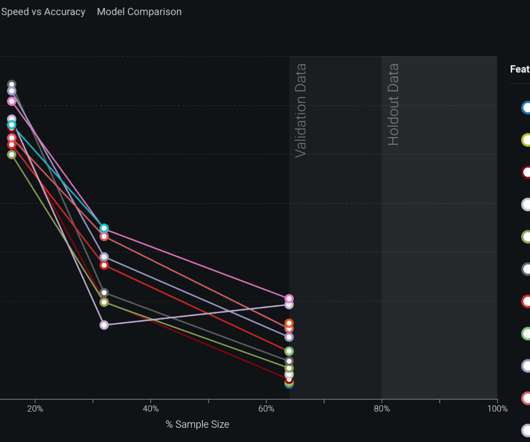

The next step in our fairness journey is to dig into how to detect biased machine learning models. In our previous article, we gave an in-depth review of how to explain biases in data.